What Is Bot Traffic And How To Stop Traffic Bots?

Bots are an inevitable element of today's digital world, making up almost half of web traffic.

According to the Imperva 2022 report, over 40% of internet traffic is non-human activity. This includes bots ranging from legitimate crawlers to malicious software. The report highlighted that bad bot traffic is almost double that of good bots.

Bots crawl all over the internet and affect websites in multiple ways. Some bots are used by website owners for their benefit, while others are malicious and should be blocked wherever possible.

Before we move forward to the different types of bots and methods of stopping bot traffic, let us learn about bots and bot traffic.

What are Bots and Bot Traffic?

Bot is short for robot; these programs are also known as internet bots. They are computer programs designed to automate tasks that a human would otherwise perform, simulating human activity without external input. They can execute mundane and repetitive tasks swiftly and efficiently, making them a practical option.

The term bot traffic refers to the non-human traffic on your website or app. Although bot traffic is quite normal on the internet, the term usually has a negative connotation. That does not rule out good bots, though. Whether a bot is good or bad depends on the purpose for which its creator built it.

In this digital era, bots are present in almost every sphere and are used for multiple purposes. Essential search engine bots and digital assistants like Alexa and Siri are considered good bots.

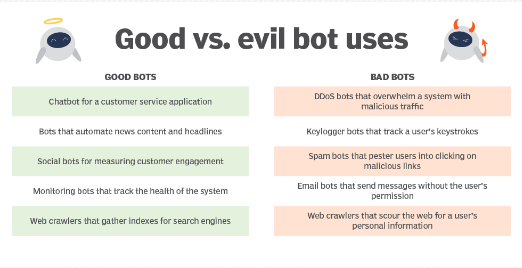

On the other hand, malicious bots are used for DDoS attacks, data scraping, and other nefarious activities. Thus, there are two different bot activities, good and bad. Here is more detail about each category.

What is Good Bot Traffic?

As their name suggests, 'good' bots cause no harm to your website. They usually make their presence and activity on your page known. Many people use these advanced bots to ease their routine work. Such automated bots take on a lot of repetitive work for website owners and help them manage multiple tasks quickly and efficiently.

Although internet bots are infamous for their malpractices, some legitimate bots aid website traffic instead of causing trouble. Some of the famous 'good' bots include:

Search engine bots

These are the essential search engine crawlers that help discover content on the web. They crawl your website to locate and index its content, so that the search engine can serve the required information when someone searches for something. Thus, traffic from these bots is good for your website.

Commercial bots

These are the bots used by commercial companies for their interests. They send these bots to crawl the internet and collect the necessary information.

For example, online advertising networks use commercial bots to optimize display ads, research companies use them to monitor market news, and so on.

SEO crawlers

Those familiar with SEO have probably used tools like Ahrefs and Semrush for keyword research and other tasks. These tools also send bots to crawl the web and gather information per your requirements.

Monitoring bots

These sophisticated bots help monitor website metrics like uptime, bounce rate, etc. They keep you updated about the server status and website by periodically checking and reporting different data. This helps you keep track of your website and act in case of any malfunction.

Copyright bots

These bots scan the web to check that your content or images are not being used without permission. Tracking a stolen image across such a vast web is quite challenging. Thus, bots help in automating the task and safeguarding your copyrighted content.

What is Bad Bot Traffic?

Spam bots are a common sight on several websites. Unlike genuine bots, bad bots are created with malicious intent. They often show up as nonsense comments or as bots that buy out all the good seats at a concert. They can also take the form of obnoxious advertisements and irrelevant backlinks.

These malicious bots are the reason for the bad reputation of internet bots. They are present in significant numbers all over the web and create trouble for users. Some prominent types of malicious bot traffic to avoid are:

Scrapers

These annoying bad bots scrape websites and download every piece of valuable information they can get, including content, files, images, and videos. The website owners who use these bots reuse the stolen data on their own websites without permission. Scrapers also harvest email addresses and other contact information so that malicious emails can be sent to those contacts.

Spam bots

Sometimes, you find bizarre messages in your email or comments on your blog from a random stranger. Spam bots do this. They take multiple forms, such as form-filling bots that fill out contact forms on websites and spam traffic bots that flood your website with ads and other inappropriate links.

DDoS bots

These are among the oldest and most damaging bots. DDoS stands for Distributed Denial of Service. Networks of infected devices, known as botnets, are used to perform DDoS attacks.

When a DDoS bot is installed on a PC, it targets a specific website or server to bring it down. These bad bots damage those sites financially by degrading their speed and performance. In the worst case, they bring the entire website offline.

Click fraud bots

Many site owners are charged high advertising fees because of these malicious bots. Ad fraud bots click on pay-per-click (PPC) ads to generate additional revenue, disguising themselves as legitimate users. Because they trigger fake ad clicks on the website, this bot activity results in an exceeded ad budget.

Brute force attack bots

These bots try to force their way into your server, typically by attempting many login credentials in quick succession. They enter your website masked as genuine users and intend to steal sensitive data. These malicious bots report back to their operator, who then sells or uses the information for their own benefit.

How to Detect Bot Traffic?

Crawler bots are increasingly prevalent across the web. While some of them are useful, malicious bot traffic hurts your website's analytics data by skewing the site metrics.

Thus, it is important to identify bot traffic on your website and eliminate this unwanted traffic. Web engineers and providers like VPS Server can directly look at network requests to their website to identify bot traffic.

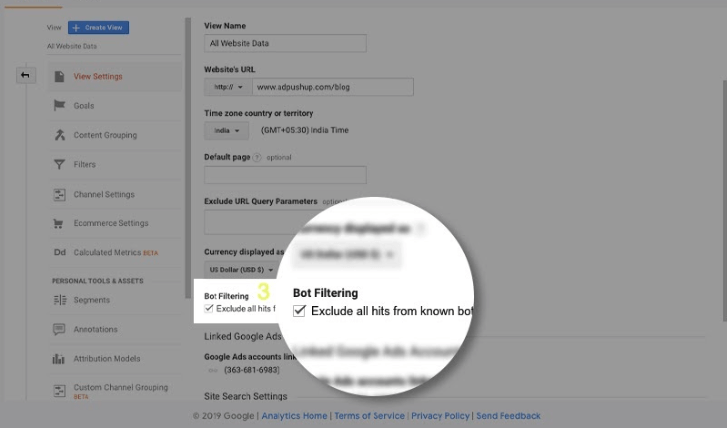

Google Analytics is one of the best tools to detect bot traffic on a website. Your Google Analytics account contains a 'Bot Filtering' checkbox, which helps filter known bot traffic out of your reports. The following anomalies in Google Analytics data help in detecting bot traffic by indicating the existence of website traffic bots:

Unusual page visits from a single IP address

A publicly accessible website normally receives visits from many IP addresses, depending on its location, customer base, and other factors. However, if it attracts unusually high traffic from an unexpected source or a single IP address, that is a strong sign of bot traffic.

The location of the IP address can also be a deciding factor. For example, a website with Japanese content receiving a high volume of visits from a Russia-based IP address is a red flag.
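The single-IP check described above can be sketched in Python. This is a minimal illustration, not a production detector: the function name `flag_heavy_ips`, the thresholds, and the sample log lines are all assumptions, and it assumes the client IP is the first whitespace-separated field of each access-log line.

```python
from collections import Counter

def flag_heavy_ips(log_lines, max_share=0.2, min_requests=5):
    """Return {ip: request_count} for IPs that dominate the traffic.

    Assumption: the client IP is the first whitespace-separated field,
    as in common Apache/Nginx access-log layouts.
    """
    ips = [line.split()[0] for line in log_lines if line.strip()]
    counts = Counter(ips)
    total = sum(counts.values())
    return {
        ip: n
        for ip, n in counts.items()
        # Flag only IPs with enough requests AND an outsized share of traffic.
        if n >= min_requests and n / total > max_share
    }

# Hypothetical sample: one address sends 8 of 12 requests.
sample = (
    ['203.0.113.7 - - "GET /pricing HTTP/1.1" 200'] * 8
    + ['198.51.100.4 - - "GET / HTTP/1.1" 200'] * 2
    + ['192.0.2.15 - - "GET /blog HTTP/1.1" 200'] * 2
)
suspects = flag_heavy_ips(sample)
print(suspects)
```

The thresholds are arbitrary starting points; a real site would tune them against its own traffic, since offices and mobile carriers can legitimately share one IP address.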

Unexpectedly high bounce rate

Bounce rate is the percentage of visitors to a website who bounce off, meaning they leave after viewing a single page, often within a few seconds. The analytics data stating the bounce rate of a website can also be an indicator of bot traffic.

Any anomalous patterns in this metric, such as a sudden jump or drop in bounce rate percentage without a reasonable explanation, could be the work of bots.
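As a rough worked example, the snippet below computes a bounce rate and compares it with a baseline. It assumes that a bounce is a session with exactly one page view; the function names, the 15-point tolerance, and the sample data are hypothetical.

```python
def bounce_rate(pages_per_session):
    """Percentage of sessions that viewed exactly one page."""
    if not pages_per_session:
        return 0.0
    bounces = sum(1 for pages in pages_per_session if pages == 1)
    return 100.0 * bounces / len(pages_per_session)

def is_anomalous(today, baseline, tolerance=15.0):
    """True when today's rate differs from the baseline by more than
    `tolerance` percentage points, in either direction."""
    return abs(today - baseline) > tolerance

# Hypothetical page counts per session.
normal_day = [1, 3, 2, 1, 4, 2, 1, 5, 2, 3]   # 3 of 10 sessions bounce
bot_day = [1, 1, 1, 1, 1, 1, 1, 2, 1, 3]      # 8 of 10 sessions bounce

baseline = bounce_rate(normal_day)
today = bounce_rate(bot_day)
print(baseline, today, is_anomalous(today, baseline))
```

The check is symmetric on purpose: an unexplained drop in bounce rate is treated as suspicious just like an unexplained jump.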

A sudden surge in page views

An unexpected hike in the number of page views, even if the visits look reasonable, can be a point of concern. Sometimes, your website appears to be viewed by natural-looking visitors from different locations and IP addresses, but the visits are actually bot traffic. Checking Google Analytics data can help you ascertain whether the sudden spike in traffic is normal or the result of a distributed denial of service (DDoS) attack.
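One simple way to spot such a surge is to compare each day against the recent past. The sketch below flags days whose page views exceed the mean of the previous week by more than three standard deviations. The function name `find_spikes`, the window size, the multiplier `k`, and the numbers are illustrative assumptions, not a rule taken from Google Analytics.

```python
from statistics import mean, stdev

def find_spikes(daily_views, k=3.0, window=7):
    """Return indexes of days whose views exceed
    mean + k * stdev of the preceding `window` days."""
    spikes = []
    for i in range(window, len(daily_views)):
        history = daily_views[i - window:i]
        threshold = mean(history) + k * stdev(history)
        if daily_views[i] > threshold:
            spikes.append(i)
    return spikes

# Hypothetical daily page views; day index 8 is the surge.
views = [1000, 1040, 980, 1010, 995, 1025, 1005, 1015, 4200, 1020]
spikes = find_spikes(views)
print(spikes)
```

A flagged day is only a prompt to investigate. A marketing campaign or a viral post produces the same pattern, so the spike should be checked against known events before it is treated as an attack.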

Fake conversions

Some bots are designed to generate phony conversions on a website. These include inventory hoarding bots, which fill fake carts on an e-commerce website without checking out any items, and bots that inflate newsletter subscriptions by submitting fake forms.

These bots create fake accounts using bizarre names, email addresses, and contact information. An unforeseen spike in conversions without any specific reason can indicate fake traffic to a website and junk conversions created by these bots.

Social media 'referrer'

Bots can also crawl your social media pages. Referral traffic bots fake the referrer, the value that tells you the source of new traffic to a website. These visits can look like they come from real users but are bot traffic.

Some other signs to detect bots infecting your system are:

- Frequent crashes or software glitches.
- Slower page loading or internet connection speed without a reasonable cause.
- Google or other search engines show features that the user did not install.
- Unknown pop-ups show up, and programs run without the user's knowledge.

How to Stop Bot Traffic?

Once you know how to identify bot traffic, you must figure out ways to stop bot attacks on your system. Although the crucial need is to stop bad bot traffic from harming your website or server, you must also manage bot traffic from good and commercial bots. Good bots are not always helpful for your site, as they can strain server resources and affect site performance. Moreover, managing bot traffic can help separate good and bad bots.

Likewise, the unauthorized bot traffic to a website also varies. Not every site is attacked by the same malicious bot. Bad bots attack websites for different reasons and through different methods, so there is no standard bot management solution that fits all.

You can take proactive measures to stop malicious bots from infecting your system. Here are some bot detection tools and steps to manage good and bad bots.

How to stop bad bots?

You can do several things to stop bad bots from straining your system. Some of them are:

Block the source

One of the most basic ways to stop unwanted bot traffic is by blocking the source of the internet traffic. It could be an individual visitor or an entire range of IP addresses reflecting irregular traffic. You can also utilize bot management solutions from various providers to stop bot traffic. They detect malicious bots and block them using AI and machine learning before they can cause any harm.
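Blocking by source can be sketched with Python's standard `ipaddress` module. The block list below is hypothetical (it uses documentation-only address ranges), and the helper name `is_blocked` is an assumption. In practice this check usually lives in a firewall, web server, or CDN rule rather than in application code.

```python
import ipaddress

# Hypothetical block list: one whole range and one individual visitor.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),    # an entire range of IP addresses
    ipaddress.ip_network("198.51.100.42/32"),  # a single address
]

def is_blocked(client_ip, blocked=BLOCKED_NETWORKS):
    """Return True if client_ip falls inside any blocked network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in network for network in blocked)

for ip in ("203.0.113.9", "198.51.100.42", "198.51.100.43"):
    print(ip, "blocked" if is_blocked(ip) else "allowed")
```

Storing entries as networks lets a single visitor and a whole range share the same lookup, since a single address is simply a `/32` network.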

Monitoring bots

By monitoring malicious bots, you better understand them and their abnormal activities on your site. Create a baseline of usual human behavior that you can compare against to spot irregular activity on the site. Set up automated responses that trigger an alarm and block visitors who exceed the human threshold.
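The idea of a human threshold with an automated response can be sketched as a sliding-window counter. Everything here is an illustrative assumption: the class name `RequestMonitor`, the limit of five requests per second, and the visitor identifiers. A real baseline should come from your own traffic data.

```python
from collections import defaultdict, deque

class RequestMonitor:
    """Blocks visitors who send more than `max_requests`
    within any `window_seconds` sliding window."""

    def __init__(self, max_requests=10, window_seconds=1.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # visitor id -> recent timestamps
        self.blocked = set()

    def record(self, visitor_id, timestamp):
        """Record one request; return True if the visitor is blocked."""
        if visitor_id in self.blocked:
            return True
        hits = self._hits[visitor_id]
        hits.append(timestamp)
        # Drop requests that have fallen out of the sliding window.
        while hits and timestamp - hits[0] > self.window_seconds:
            hits.popleft()
        if len(hits) > self.max_requests:
            self.blocked.add(visitor_id)  # automated response: block
            return True
        return False

monitor = RequestMonitor(max_requests=5, window_seconds=1.0)
# A human-paced visitor: three requests several seconds apart.
human = [monitor.record("visitor-a", t) for t in (0.0, 2.5, 6.0)]
# A bot-paced visitor: eight requests 50 ms apart.
bot = [monitor.record("visitor-b", i * 0.05) for i in range(8)]
print(human, bot)
```

In this sketch the sixth rapid request pushes `visitor-b` over the limit, and every later request from that visitor is rejected.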

Install security plugin

Another way to stop bad bot traffic is installing a security plugin. If your website is built on WordPress, you can install security plugins like Wordfence or Sucuri Security. These are managed by companies with security researchers constantly monitoring and patching issues.

While some security plugins have automated features to block bots, others allow you to see the source of unusual traffic and decide how to deal with such internet traffic.

How to stop good bots?

Sometimes, it becomes essential to control good bots to maintain bot traffic on the website. Some steps that can help manage them are:

Blocking bots that are not useful

Not all 'good' bots will be useful for your website. You need to decide if these bots are of any benefit to your site. For instance, apart from Google, other search engine bots often crawl your website hundreds of times a day, but they do not bring many visitors. So, it is wiser to block bot traffic from those search engines.

Limit the bot's crawl rate

Limiting the crawl rate restricts bots from repeatedly coming back or crawling over the same links. Using Crawl-delay in robots.txt, you can adjust the crawl rate of various bots. You can assign a particular delay rate for different crawlers. Unfortunately, Google does not support crawl delay. But you can use this feature for other search engines.
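To illustrate, the snippet below parses a small robots.txt with Python's standard `urllib.robotparser` and reads back the delay that applies to each crawler. The file contents, delay values, and bot names are hypothetical. Keep in mind that `Crawl-delay` is a non-standard directive, so each crawler decides whether and how to honor it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: a specific delay for one crawler
# and a default delay for everyone else.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 10

User-agent: *
Crawl-delay: 2
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

bing_delay = parser.crawl_delay("bingbot")          # rule for bingbot
default_delay = parser.crawl_delay("SomeOtherBot")  # falls back to "*"
print(bing_delay, default_delay)
```

Reading the file back with a parser like this is a quick way to confirm that each crawler gets the delay you intended before you publish the file.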

Block and allow lists

Once you have a proper bot detection and management solution, you must set up a block list and an allow list. The 'allow list' contains the good bots you permit to roam around your website. These are the bots that you're entirely sure would be beneficial for your site. The 'block list' contains the bots you deny access to. You can also manage good bot traffic using timeboxing or rate-limiting features to allow access on your own terms.
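A block and allow list can be sketched as a small decision function. The list entries and the function name `decide` are hypothetical, and matching on the User-Agent header alone is an assumption made for brevity: that header can be spoofed, so real bot management solutions verify a bot's identity by other means as well.

```python
# Hypothetical lists of lowercase User-Agent tokens.
ALLOW_LIST = {"googlebot", "bingbot"}
BLOCK_LIST = {"badscraper", "spambot"}

def decide(user_agent):
    """Return 'block', 'allow', or 'rate_limit' for a User-Agent string."""
    ua = user_agent.lower()
    # The block list wins if a visitor somehow matches both lists.
    if any(token in ua for token in BLOCK_LIST):
        return "block"
    if any(token in ua for token in ALLOW_LIST):
        return "allow"
    # Unknown visitors are neither trusted nor banned: throttle them.
    return "rate_limit"

requests = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "BadScraper/1.0",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
]
decisions = [decide(ua) for ua in requests]
print(decisions)
```

Sending unknown visitors to rate limiting rather than blocking them outright keeps ordinary browsers working while still capping how much load an unlisted bot can create.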

Conclusion

For any website, bot traffic is the non-human traffic it experiences. Although bot traffic has its own perks, websites need to distinguish good bot traffic from bad to manage site performance. Hiring the services of an efficient web host like VPS Server can assist in detecting the different types of bot traffic on the website.

There are many signs to check for malicious bot traffic harming your website or server. Once you have identified the bots visiting your website, you can decide whether each one is abusive or useful. Consequently, you can determine what should be done with it.

Dealing with bot traffic is relatively easy if you know the proper measures. Google Analytics is one such tool that can help you manage bot traffic. In addition, investing in a certified bot management solution is another means to manage bot traffic and save your website from damaging risks.

Frequently Asked Questions

What is Bot Traffic?

Bot Traffic is web traffic from automated robots or computer programs rather than human visitors. It includes bots designed to crawl or index web pages for search engines, as well as malicious bots.

Why Is Bot Traffic Detrimental?

Bot Traffic can consume server resources, and in some cases, affect website content, rankings, or performance. It can also contain malicious code, which can cause security issues and other problems.

How Do I Block Bot Traffic?

Blocking Bot Traffic can be done by using IP Blacklisting, which prevents access to your website from specific IP addresses. Additionally, you can use the Network Security Service to detect and block malicious or unauthorized bot activity.

What Is Bot Traffic Tracking?

Bot Traffic Tracking is the process of monitoring and analyzing Bot Traffic to identify malicious activities and detect potential issues related to content, rankings, or performance.

What Is VPS Server Bot Traffic Tracking?

VPS Server Bot Traffic Tracking is a specialized form of Bot Traffic Tracking tailored specifically to VPS servers. It monitors and analyzes traffic from Bots in order to ensure maximum security, performance, and uptime.

Is There a Way to Filter Bot Traffic Visiting My Site?

You can use the Network Security Service to filter incoming Bot Traffic. This will help prevent malicious activity or unauthorized Bot activity from impacting your website. Cloudflare is also a good option to use.

What Is the Difference Between Bot Traffic and Human Traffic?

The primary difference between Bot Traffic and Human Traffic is that Bot Traffic is driven by automated robots or computer programs, while Human Traffic is driven by real people visiting your website.
